Unlock Powerful Insights from Enterprise Data

From cloud apps and data warehouses to global ERP landscapes, we help leading businesses connect, integrate and analyze data across their organizations.

Magnitude is now part of insightsoftware, – a leading reporting, analytics, and enterprise performance management software provider. Read the press release.

Inflexible Legacy Systems Obscure Vital Insights

Scattered Legacy Data Holds Back Cloud Migration

Many companies struggle to achieve the anticipated value from their cloud initiatives. With so many technologies to navigate, data sources can become more scattered, and proprietary legacy technologies require deep expertise to enable cloud-native development.

Managing Disparate Data Connectors Stifles Innovation

How can you get to market faster, increase customer adoption, and drive innovation when all your time is tied up with sourcing, maintaining, and managing ad-hoc data drivers from multiple vendors or using APIs to deliver data access?

Legacy Applications No Longer Fit for Purpose

Legacy applications and ERP systems have evolved over decades to support sophisticated processes but are now seen as a complex maze of hybrid technologies that just end up holding back progress.

Solutions for the Data-Driven Enterprise

Faster Pathways To the Cloud

From improving analytics to acting on new opportunities and customer demand, the sky is the limit when you move to the Cloud. Transform aspirations into reality with a database connectivity platform that integrates cloud data warehouses and data lakes, SaaS apps, and NoSQL platforms to help you bring together data from your hybrid cloud environments. Improve time-to-value of complex, ERP-to-the-cloud initiatives with context-rich and intuitive business data models and analytics tools that provide a single view for reporting against on-premises and cloud-hosted enterprise applications.

Connect To Any Data Source

Make the most of your application or data platform with a data connectivity solution that’s dependable, scalable, and customizable. Each connector is meticulously optimized for performance and to enable standards-based connectivity to your customers’ data sources. We invented the ODBC standard in partnership with Microsoft 20 years ago, and we’ve been progressing the world of data connectivity ever since. Work with technology that is trusted by 100 percent of the Gartner BI and Analytics Magic Quadrant leaders.

Fast-track Your ERP Modernization

Smooth your transformation to the Cloud with analytics and integration solutions that support your ERP modernization, whether you’re lifting and shifting or re-imagining how your business operates on Oracle Cloud Applications or SAP S/4HANA. Use automation to accelerate business data input and output associated with transformation programs. Eliminate the need to write custom interfaces with ready-to-deploy application integration supporting 16 different ERP platforms and more than 60 database providers.

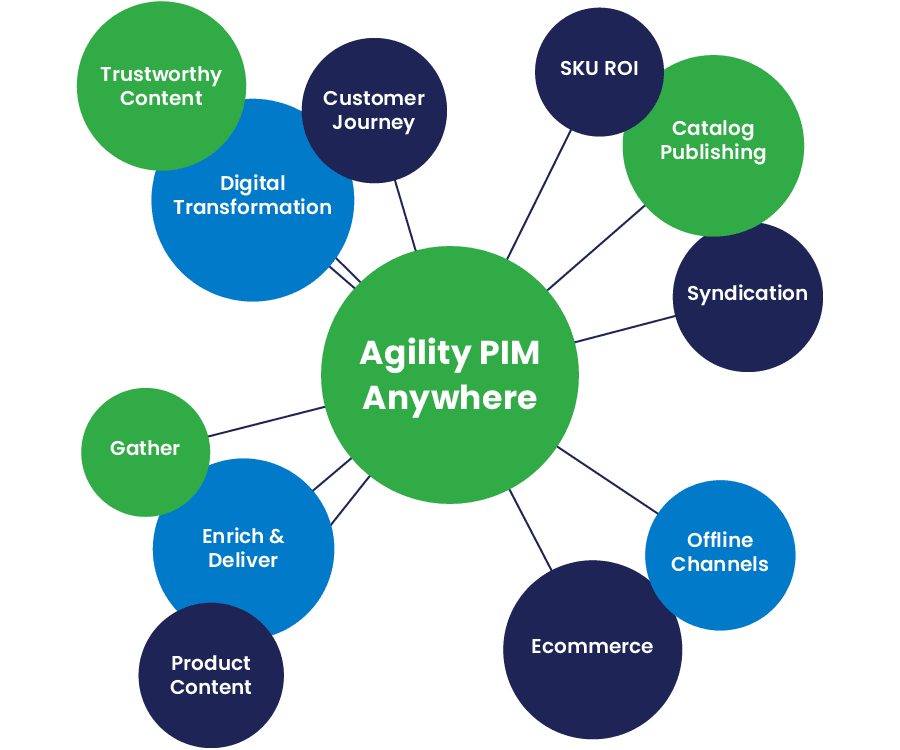

Agility PIM

Take Charge of Product Data

Agility PIM helps organizations across all industries to effectively manage their product information. Get products to market faster with a simple-to-use, comprehensive Product Information Management (PIM) solution that efficiently supports commerce across digital and traditional channels.

Angles for Oracle

Unlock Oracle Data for Powerful Business Insights

Angles for Oracle (formerly Noetix) delivers a context-aware, process-rich business data model, a library of 1,800 pre-built, no-code business reports, and a high-performance process analytics engine for Oracle Business Applications, including EBS and OCA. Unlock your enterprise data to uncover actionable insights so you can act decisively in an uncertain and quickly changing world.

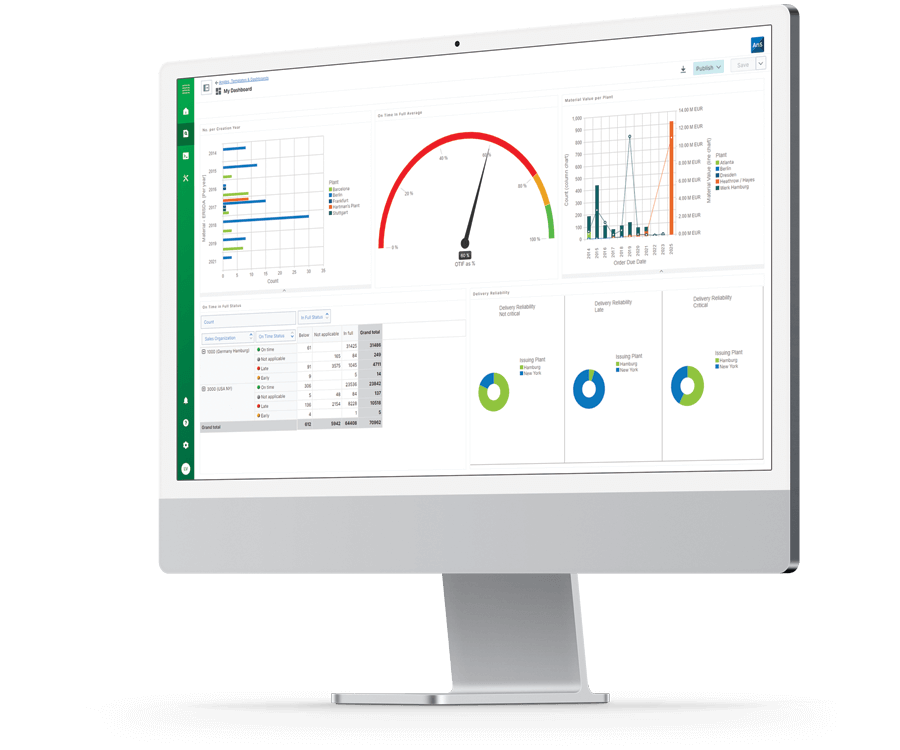

Angles for SAP

Transform SAP Data Into Actionable Insights

Angles for SAP (formerly Every Angle) transforms and enhances your critical data from SAP ERP tools (including ECC and S/4HANA), turning it into actionable insights. Put the power of operational analytics and business intelligence into the hands of the people who need it most – your business users.

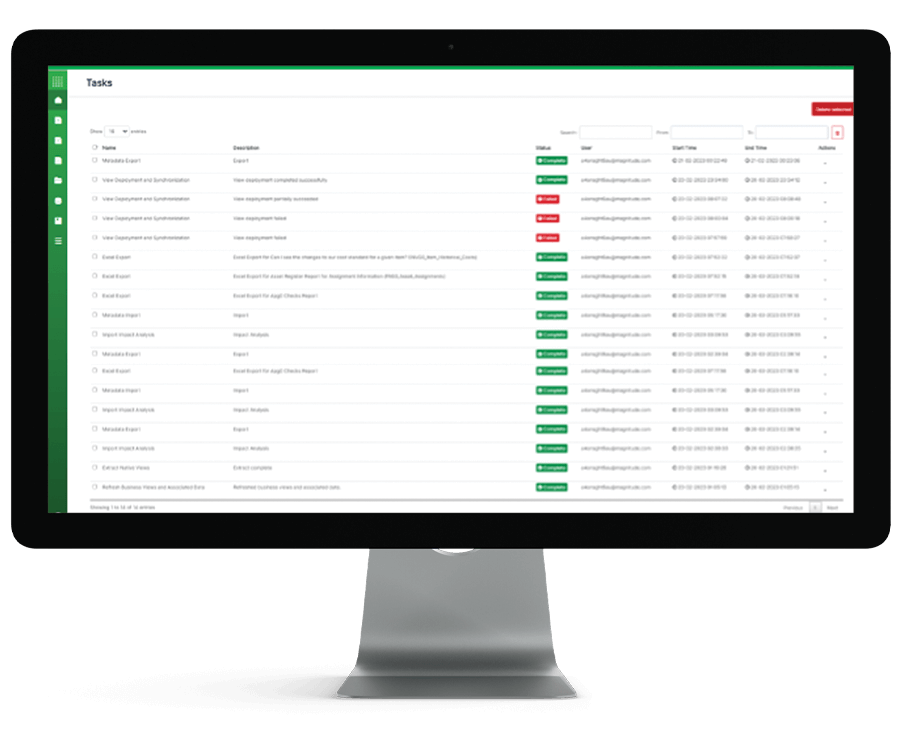

Process Runner

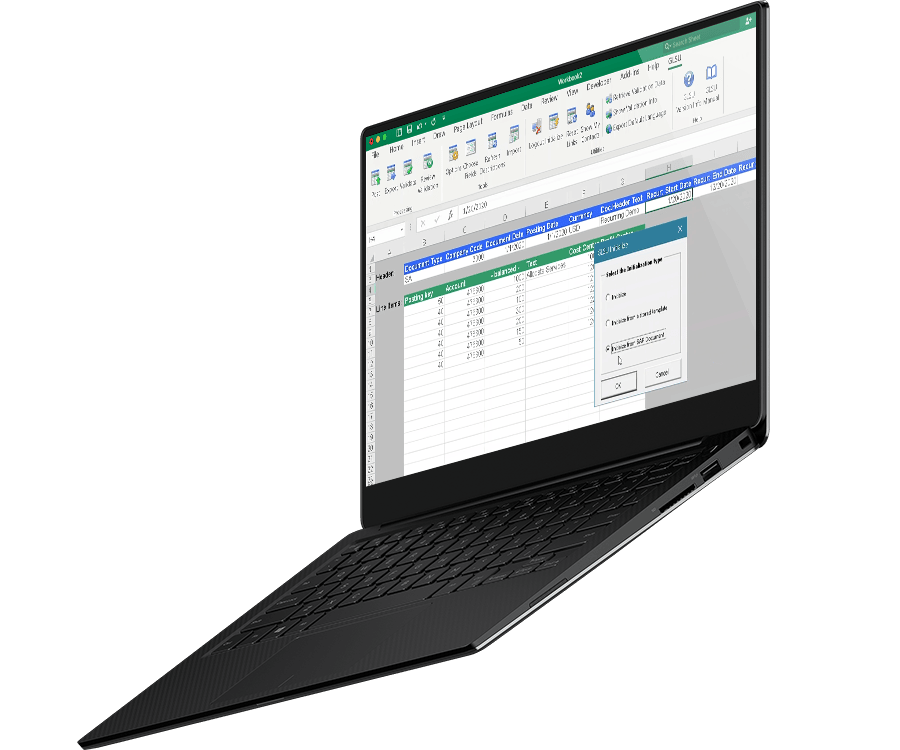

Powerful SAP Data Automation. Faster

Process Runner turns Microsoft Excel into your data management cockpit for SAP with no-code transaction automation, robust data management, and flexible workflow features that make working in any business process faster and easier. Discover how SAP data automation can change your business.

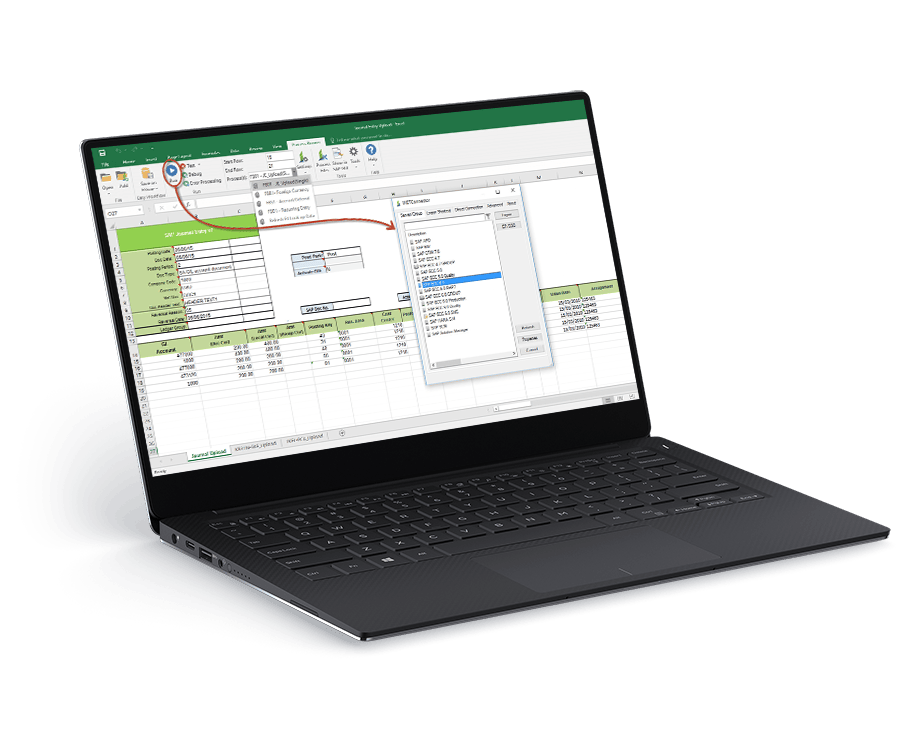

Process Runner GLSU

Automate SAP Financial Data Entry using Microsoft Excel

From simple recurring journal entries to allocations thousands of lines long, Process Runner GLSU (formerly Z Option GLSU) provides a flexible, intuitive interface for SAP data entry and transaction posting directly from Microsoft Excel. Empower your Finance team to work faster and smarter with powerful automation and pre-post data validation features.

Simba

Simplify access to data across applications and data platforms

Simba is a complete data connectivity solution portfolio delivering data access to and from applications, data platforms, databases – virtually any data source – efficiently and effectively. Connect across all your data sources with scalable, enterprise-grade data connectors, the Simba SDK, and managed services offering provides you with custom-built connectivity solutions through testing and certification.

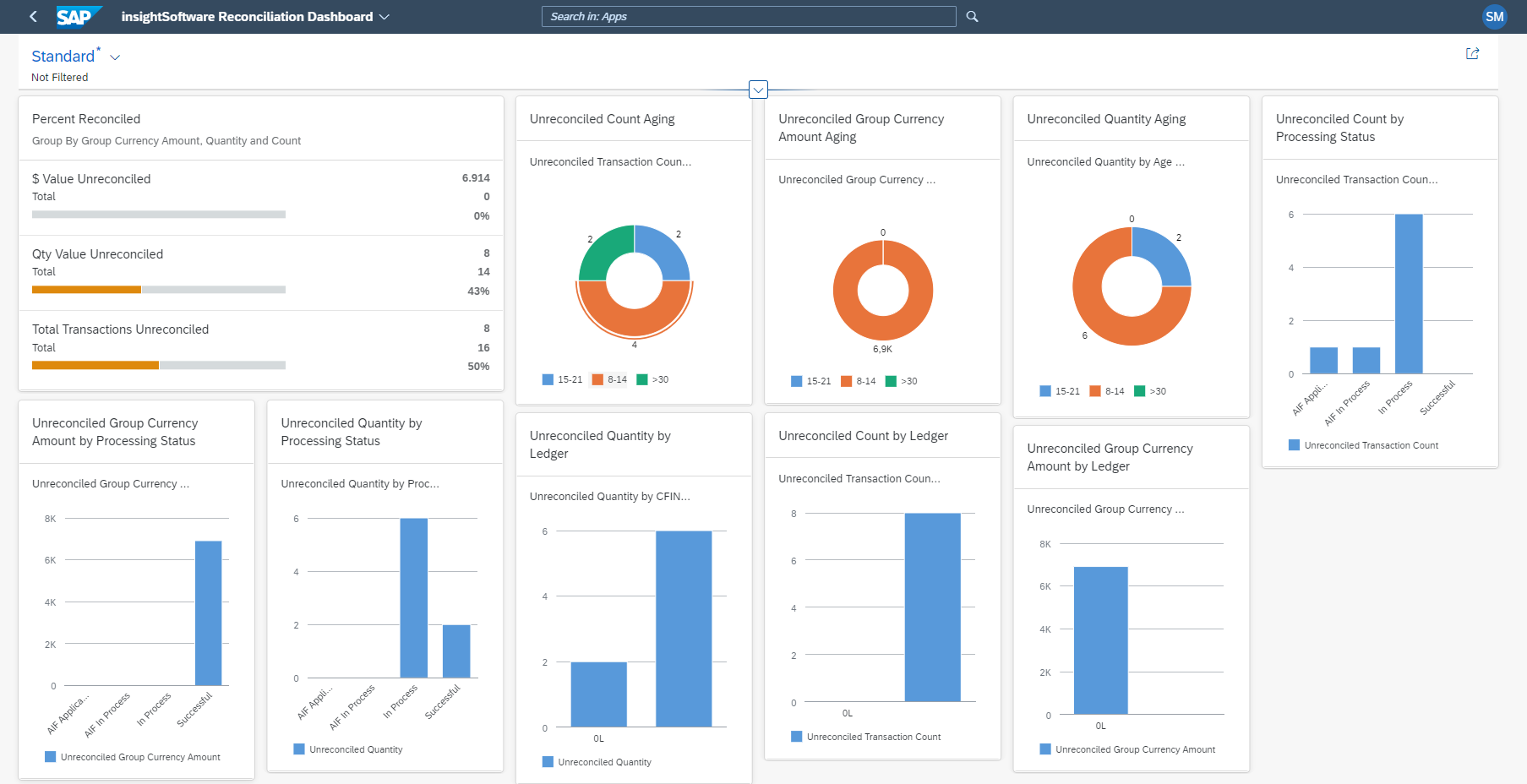

SAP Central Finance Solutions

Enable Faster & Better SAP Finance Implementations

We deliver faster, better, and more cost-effective SAP Central Finance implementations by enabling and streamlining third-party data integration. Our Central Finance solutions are globally available through SAP as SAP Solution Extensions.

Integrates with:

Support Community

My Account

Review your open support tickets, access user documentation, product updates and more.

Request an Account

Are you a new customer in need of a Support Community account? Find your product below to get started.

Speak to an Expert